It’s not news that AI is hot nowadays, but integrating it into our daily tools in a way that’s actually useful is still a work in progress. Should it be an assistant listening for a key phrase to be uttered, or a floating button waiting to be clicked? With the release of Copilot Vision, Microsoft offers a different way for its GenAI system, Copilot, to be tightly integrated into Microsoft Edge, and therefore into your daily Web browsing adventures. Here’s how it works…

Microsoft Copilot started life as a branded version of ChatGPT but has since grown to have more features, capabilities, additional large language models (LLMs), and a better structure for data confidentiality and privacy than the default OpenAI utility. Copilot has also become deeply embedded into just about every Microsoft product, from Word to Excel to Teams. Where it’s most visible, however, is in its ubiquitous integration into Windows 11 and the Microsoft Edge browser. Edge is built atop Chromium, the open source heart of Google Chrome, but if you’re running Chrome, you have Google Gemini AI instead of Microsoft Copilot.

It’s not easy to keep up with all of these programs. Then there are the many other quite capable programs that can act as helpers, including ChatGPT itself, Claude, and Perplexity. A click or keyboard shortcut and they’re all ready to process your requests or act on your prompts. Darn helpful if you use them, and minimally disruptive if you prefer to ignore them while working, studying, or playing.

Shortcuts: Copilot Vision | Getting Started with Vision | Vision in Action | What You Can Do With Vision

Getting the interface right is tricky, however, as indicated by the wide range of options available. That’s where Microsoft’s latest release of the Edge browser is really slick: It not only introduces Copilot Vision, but makes using it super easy too, all while keeping the standard Copilot icon on the toolbar along the top of the browser.

WHAT’S NEW IN COPILOT VISION

Your question at this point is probably: What’s new with Copilot “Vision”?

What better way to find out than to ask Copilot itself? Here’s its explanation:

Copilot Vision enhances the standard Copilot experience in Microsoft Edge by adding several new features and capabilities:

- Visual Context Understanding: Copilot Vision can analyze and understand the content of web pages you’re viewing. It can describe images, interpret charts, and provide context for complex visuals.

- Conversational Interaction: Unlike the standard Copilot, Vision can engage in natural language conversations about the content on the page. It can answer questions, provide summaries, and offer insights based on what it sees.

- Task Assistance: Vision can assist with tasks like planning a visit to a museum, helping with holiday shopping by pointing out relevant products, or even explaining how to play a new game.

- Multi-modal Processing: Combining visual and language processing, Copilot Vision provides more comprehensive and insightful responses.

- Opt-in Feature: Copilot Vision is entirely opt-in, meaning you decide when to activate it.

Copilot Vision makes browsing more interactive and helpful by acting as a “second set of eyes” as you navigate the web.

Worth highlighting is that it’s only available to a limited number of Pro subscribers through Copilot Labs at this point, so you might not see it in your updated copy of Microsoft Edge quite yet. But let me show you how it works so you can look forward to its arrival in your own copy of Microsoft Edge…

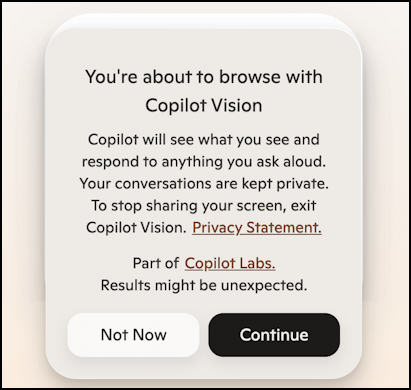

I updated my own Microsoft Edge browser on my MacBook Pro to version 133.0.3065.59 and here’s what I saw on restart:

The big update is that it can now see and process the Web page you’re viewing in the browser.

But first, a privacy disclaimer…

Didja catch that extra bit about it responding to anything you ask? Yes, it’s a chatty voice interface (if you want), which is fantastic. Imagine staring at a boring document or contract in your browser and being able to ask Copilot Vision “hey, what’s important on this page?” or “summarize the terms” or “compare this to standard contracts and tell me what’s different and significant”.

GETTING STARTED WITH COPILOT VISION

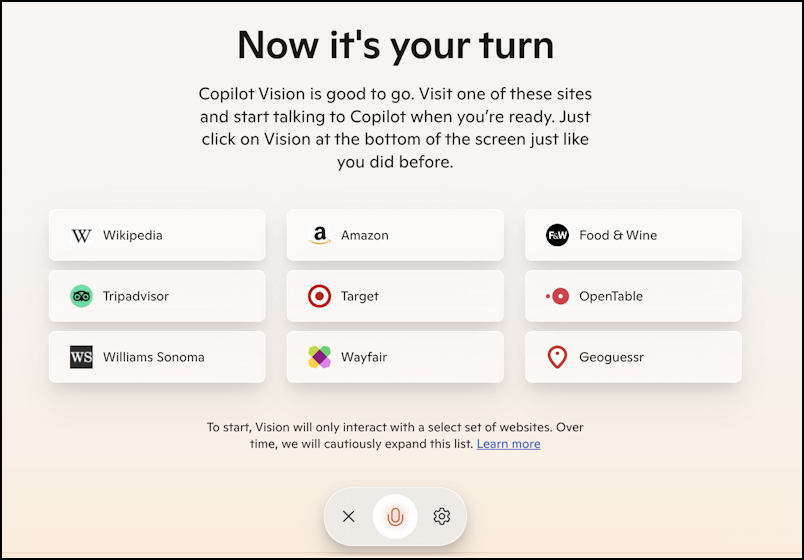

Once you agree to the privacy terms by clicking on “Continue”, you’ll get a bit more info about what’s new:

Students, imagine being able to just ask “what’s important on this page?” when viewing a dense, information-packed Wikipedia entry, or asking “do they have any Hello Kitty t-shirts?” while on Amazon or the Target website.

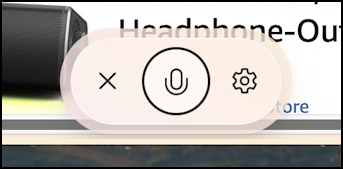

Notice the floating window at the bottom. That’s Copilot Vision in voice mode, and the microphone’s glowing beige color means it’s listening. No typing required, just talk to it. Or click the “X” to close it and go back to your regular tasks.

COPILOT VISION IN ACTION

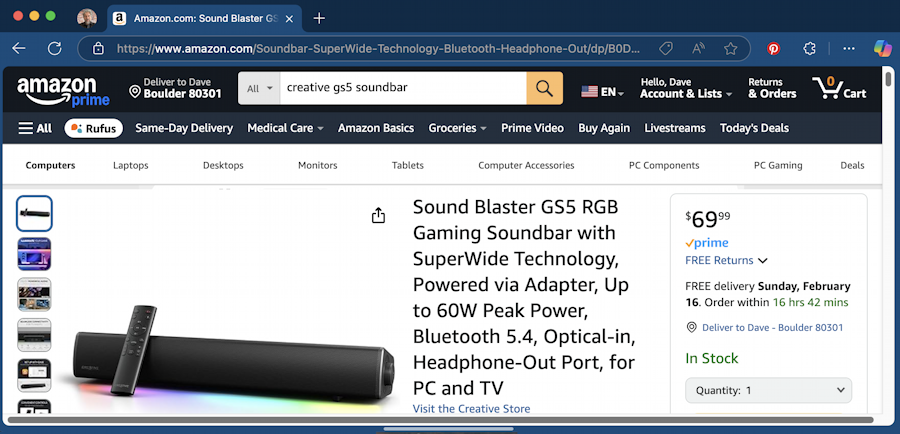

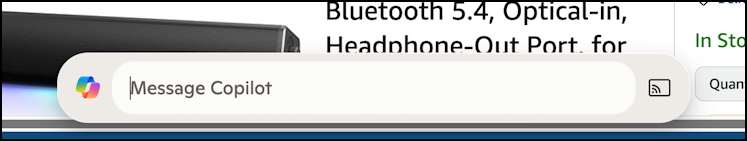

Once you close the demo prompt, Vision drops down to its übersubtle form along the very bottom of the browser. Can you see it in this screenshot from Amazon?

Look very closely at the dark blue strip along the bottom and you’ll notice a short grey bar tucked away. That’s the hotspot for waking up Copilot Vision. Simply hover your cursor over it…

Click on that and this floating mini-window appears:

Here you can type in a query about the current page you’re viewing (or any other Copilot query, of course). But where it really gets fun is if you click on the icon on the right side. That brings up voice mode:

Compare this icon with the one shown earlier and you can see what I mean about the “glowing beige”. It indicates that Copilot is listening, whereas a black outline without the glow generally means Copilot is talking to you.

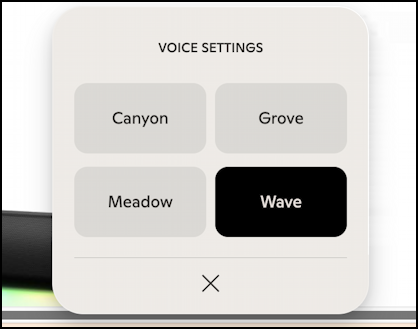

Click on the gear icon and you can pick from four different voices, two deeper and more masculine, and two higher-pitched and more feminine:

As an aside, props to Microsoft for having Wave, a masculine voice, as the default. That’s a bigger topic, so I won’t go further down this particular rabbit hole other than to offer kudos to the Microsoft team that made this decision.

WHAT CAN YOU DO WITH COPILOT VISION

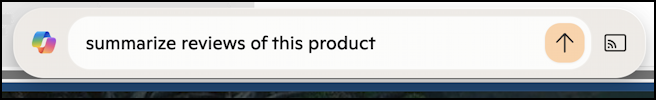

Now that Vision’s up and ready to chat – or read whatever prompt I type in – what can it do now that it can read Web pages? Here’s a great prompt:

This shows me typing in the query, but I actually used voice mode and just asked Vision to summarize the reviews, which it promptly did in its surprisingly human and lifelike voice. No typing, just chatting with it, and after every response, it waits for my next query, which might be a request for more information about a specific feature or something completely different.

In practice, chatting with the Web browser is quite amazing and suggests a completely different way to interact with the endless deep well of information and content on the Internet. I am an instant fan.

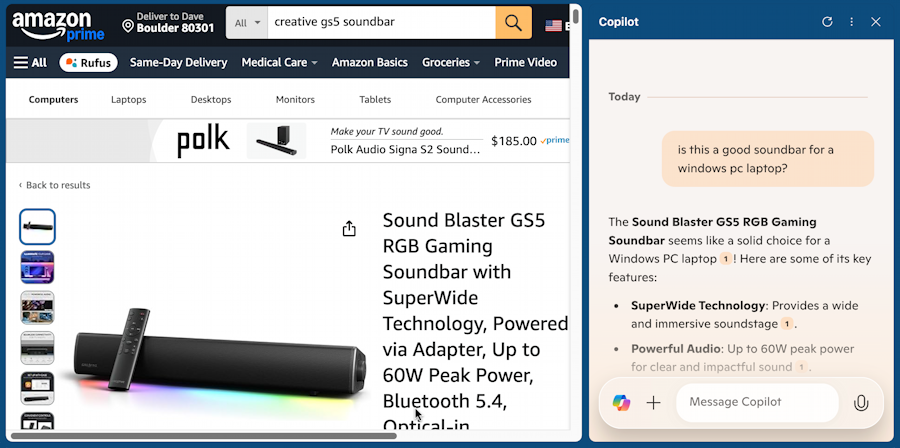

If you type in your query, it’ll pop open a sidebar. I asked Vision “is this a good soundbar for a windows PC laptop?” and you can read its response based on the specs and reviews on the Amazon page:

To be fair, none of this is revolutionary, since GenAI programs like Copilot, ChatGPT, Perplexity, et al. have had voice interfaces for a while now, and there are other GenAI utilities that can read and analyze Web pages (not to mention Rufus, Amazon’s AI program that’s woven into the Amazon shopping experience). What’s new is how you work with Copilot Vision through the omnipresent bar neatly tucked into the bottom of every browser window. That makes it incredibly convenient.

Suffice it to say, Copilot Vision is another step on the road to not just integrating GenAI into our everyday work, but doing so in a simple and convenient manner that makes it a no-brainer to play and experiment. There’s no question that asking “tell me about this page” is much nicer than reading a 1,200-word article. Like this one! What does Copilot Vision think about this article? Now you can find out…

Pro tip: I’ve been writing about AI and its many uses for quite a while. While you’re here, I invite you to check out my AI help library for more useful tutorials!